Collaborative computing, pioneered at UW–Madison, helped drive LHC analysis

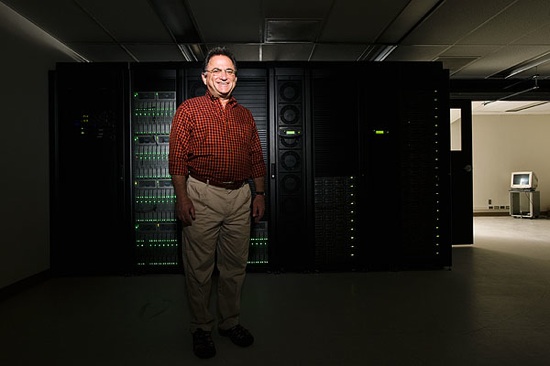

Miron Livny is pictured near an enclosed bank of distributed computing equipment in the Computer Sciences and Statistics building. Livny specializes in distributed computing, which pools the computing power of thousands of processors to conduct number crunching at a huge scale.

Photo: Jeff Miller

When scientists at the Large Hadron Collider in Europe announced the appearance of a new particle among the pieces of smashed protons, Miron Livny saw a huge scientific success.

But the University of Wisconsin–Madison computer scientist reveled in more than a fascinating research finding.

“It’s also a huge triumph for mankind,” says Livny, chief technology officer at the Wisconsin Institutes for Discovery. “There were more than 40 nations that came together for a long time to do this one thing that — even if it all worked out — wasn’t going to make anyone rich. It’s a powerful demonstration of the spirit of collaboration.”

Collaboration has been guiding Livny’s work for decades, and it is a large part of UW–Madison’s contribution to the search for the Higgs boson.

Whenever a physicist in the United States wants to sift the avalanche of data collected at the LHC, they tap Open Science Grid, a network of computing resources for which Livny serves as principal investigator.

Open Science Grid services knit together researchers, many repositories of LHC data (UW–Madison is home to two research teams, one for each of the two biggest experiments at the LHC) and more than 100,000 computers at about 80 sites around the country.

“The detectors at the LHC are really huge, high-tech cameras. Only they aren’t just looking at light. They’re capturing all sorts of energy,” says Dan Bradley, a University of Wisconsin–Madison software programmer working on LHC’s Compact Muon Solenoid (CMS) experiment. “There is so much to keep track of at once that a lot of custom electronics had to be designed to catch it all.”

Specially designed computers like the “trigger,” developed for CMS by a team led by UW–Madison physicist Wesley Smith, decide in the lightning-quick aftermath of a proton collision whether the event is worthy of further study.

Worthy data is dumped into the computing center adjacent to the collider in Europe. That data is handed off via the Worldwide LHC Computing Grid to national sites around the world, including Fermilab in the Chicago suburb of Batavia, Ill.

National Tier 1 sites like Fermilab do the first-blush analysis of the data, identifying the particles that tumbled away from the recorded collisions (which, in the bizarre world of quantum mechanics, aren’t even necessarily parts of the crashing protons), and hand the data off again to Tier 2 sites like UW–Madison.

“You can’t see a Higgs boson. You know it’s there by watching the tracks and energy deposits of particles produced when it decays,” says Bradley. “So physicists looking at collider data want to see everything of a type — say, every instance when two high-energy photons were produced.”
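The kind of selection Bradley describes can be pictured with a small sketch. This is an illustration only, not the experiments' actual software: the `Particle` record, the `has_two_energetic_photons` helper, the 25 GeV threshold and the sample events are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    kind: str          # hypothetical label, e.g. "photon" or "muon"
    energy_gev: float  # reconstructed energy in GeV


def has_two_energetic_photons(event, min_energy_gev=25.0):
    """Return True if the event holds at least two photons at or above the threshold.

    The 25 GeV default is an arbitrary value chosen for illustration.
    """
    photons = [
        p for p in event
        if p.kind == "photon" and p.energy_gev >= min_energy_gev
    ]
    return len(photons) >= 2


# Three made-up events, each a list of reconstructed particles.
events = [
    [Particle("photon", 62.0), Particle("photon", 41.5)],
    [Particle("photon", 80.0), Particle("muon", 30.0)],
    [Particle("photon", 55.0), Particle("photon", 3.0), Particle("photon", 47.0)],
]

# Keep the indices of events that pass the selection.
selected = [i for i, ev in enumerate(events) if has_two_energetic_photons(ev)]
print(selected)
```

In practice a physicist would run a selection like this over a vast number of recorded events, which is why the work is spread across many computers.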

On Open Science Grid, retrieval and analysis of all those similar events within the flash of a collision is done across a pool of shared computer resources similar to the pools Livny and computing researchers in Madison have been filling since the mid-1980s with their widely used Condor High Throughput Computing Technologies.

“When someone has work to get done, they do not worry about whether it’s running at Purdue or UC-San Diego or in Madison,” says Alain Roy, Open Science Grid software coordinator and member of UW–Madison’s Center for High Throughput Computing. “They don’t even know. They submit their work, and it is routed to an appropriate computer somewhere in OSG.”
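A toy sketch can make the routing idea concrete. It is not how Open Science Grid or Condor actually schedules work: the `Site` record, the `route_job` function, the slot counts and the "most free slots wins" rule are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    free_slots: int  # hypothetical count of idle processors at the site


def route_job(sites):
    """Send one job to the site with the most free slots.

    Returns the chosen site's name, or None if every site is busy.
    This selection rule is an assumption, not the real scheduling policy.
    """
    candidates = [s for s in sites if s.free_slots > 0]
    if not candidates:
        return None
    best = max(candidates, key=lambda s: s.free_slots)
    best.free_slots -= 1  # the job now occupies one slot
    return best.name


# Made-up pool using the sites Roy mentions.
pool = [Site("Purdue", 1), Site("UC-San Diego", 0), Site("Madison", 2)]

# Submit four jobs; the submitter never names a site.
placements = [route_job(pool) for _ in range(4)]
print(placements)
```

The person submitting never picks a destination. The pool decides, and a job simply waits (here, gets `None`) when nothing is free.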

At times, LHC researchers are making use of two-thirds of the roughly 2 million hours of computing time Open Science Grid makes available on a daily basis. That computing power is also collected from computers already working on other research projects.

“Sometimes the LHC jobs will be running here,” says Bradley, referring to computers in UW–Madison’s Physics Department. “Sometimes they’ll be running at IceCube (a Madison-based neutrino experiment) or over at Computer Sciences. The data is flowing around campus depending on what’s available, or even out to other campuses.”

Open Science Grid allows the U.S. scientists in the LHC experiments to be both more efficient and more collaborative.

“LHC experiments like CMS and ATLAS are big enough that they could build separate computing infrastructures,” Roy says. “Instead, we’ve done it once for the U.S. portions of both experiments. That saves resources and shares expertise.”

The flow of data mirrors the movement of software, hardware and know-how on UW–Madison’s campus, which contributed Livny’s pioneering computing, Smith’s CMS work and physicist Sau Lan Wu’s service on the ATLAS experiment to the LHC effort.

“The reason we are so active in the LHC is because of our on-campus collaboration between physics and computing,” Livny says. “Open Science Grid is all about collaboration, sharing, working together. That’s the underlying concept that we pioneered in the Condor work. You let me use your workstation when you aren’t. I let you use mine when I’m not. We all benefit.”

Similar shared computing resources are working with LHC data around the world, putting the fruits of the most powerful particle collider ever built close at hand to thousands of researchers.

“The vision was to get the data into the hands of researchers at universities around the world, because the eyes, the brains, the innovation is with the faculty, postdocs and students at the universities,” Livny says. “That’s how you get the best result, big results like they’ve gotten at LHC.”